18 Dec

Across trust & safety, a group of industry leaders focuses on ethics, training, transparency, and collaboration, creating a foundation for safer, more resilient digital spaces.

Even though community management and moderation have been around for decades, calling the industry “Trust and Safety” is relatively new. Additionally, artificial intelligence, live content, and tightening regulations have completely reshaped what it means to build positive online communities. On platforms where millions of players interact in real time, trust & safety is pivotal for user protection and brand reputation.

Quick Takeaways

- TSPA and the Trust & Safety Foundation define the professional standards for moderation teams.

- DTSP and Tech Coalition lead cross-company accountability and framework development.

- Thorn, NCMEC, and IWF are the global backbone for child safety and exploitation prevention.

- NewsGuard and The Trust Project strengthen media integrity and brand safety.

- Multiple countries and regions guide regulatory innovation and transparency.

Professional Standards and Community Leadership

Building the “People Side” of Trust & Safety

The Trust & Safety Professionals Association (TSPA) and Trust & Safety Foundation (TS Foundation) form the foundation of moderation as a modern profession. They provide online and in-person training and conferences, establish ethical guidelines, and offer mental health support for moderators, whose roles are often overlooked despite being critical to healthy communities.

TSPA connects professionals through shared resources and mentorship, while the TS Foundation funds standardization and research projects. Together, they define the human infrastructure of trust and safety, making sure every technical or policy advancement stays grounded in ethical practice.

Orgs Extending Expertise

Other groups, such as the Thriving in Games Group (TIGG) and Roost, focus on expanding specific areas of Trust & Safety expertise. TIGG connects large and small studios to share prosocial design methodology, helping the industry think beyond safety alone. Roost helps new and seasoned developers alike apply open-source trust and safety tools and strategies to build or augment their infrastructure.

Recent TIGG talk featuring GGWP

Industry Collaboration and Shared Frameworks

Defining Accountability Across Companies

The Digital Trust & Safety Partnership (DTSP) and Tech Coalition have become anchors for organizational accountability.

- DTSP sets operational benchmarks for detecting, responding to, and preventing online harm. Its standards help companies measure performance and demonstrate transparency to users and regulators.

- Tech Coalition unites major platforms to fight child exploitation through shared technology and data exchange.

Why Collaborate?

As gaming communities overlap with live content and social platforms, harms cross platform boundaries, and shared frameworks give companies a common way to address them. These organizations demonstrate that collaboration, not competition, raises standards and builds safer digital ecosystems across industries.

Child Safety and Exploitation Prevention

The Backbone of Global Protection

Child protection demands more diligence than any other content moderation category. Organizations like Thorn, NCMEC (National Center for Missing & Exploited Children), and IWF (Internet Watch Foundation) lead this effort worldwide.

- Thorn develops technology to detect and remove child sexual abuse material.

- NCMEC acts as a centralized reporting hub connecting platforms and law enforcement.

- IWF coordinates international takedown and prevention strategies.

These organizations define the ethical baseline for digital safety. Their collaboration ensures that content moderation systems go beyond detection: supporting survivors, aligning with global law enforcement, and guiding industry-wide best practices.

Expanding Coordination

INHOPE, a network of international hotlines, also strengthens this system by managing cross-border responses. These organizations collectively create the child safety backbone of trust and safety operations.

Integrity, Misinformation, and Media Literacy

Protecting Information Reliability

On any online platform, misinformation and manipulation threaten user trust. Organizations like NewsGuard, The Trust Project, and Common Sense Media work to reinforce what it means to be reliable and transparent.

- NewsGuard rates information sources by credibility and transparency, helping advertisers and platforms assess risk.

- The Trust Project partners with publishers to label sources clearly and promote accurate attribution.

- Common Sense Media supports media literacy for younger audiences, helping families and players understand how digital information spreads.

These efforts extend far beyond journalism. Their principles now guide brand safety, in-game advertising, and community content moderation.

Regulatory and Research Innovation

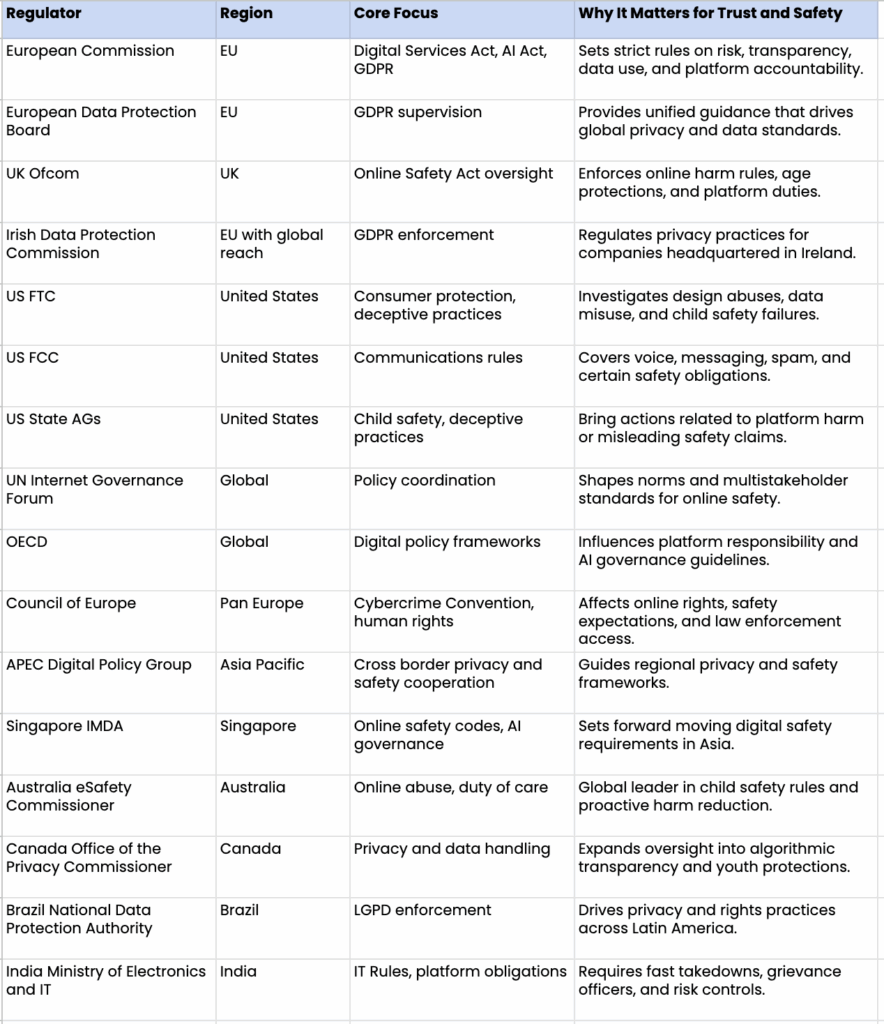

Modern content moderation doesn’t exist in a vacuum. As governments establish digital safety frameworks, collaboration with regulators has become essential. Different regulatory bodies govern different regions, with regulation that is followed internationally.

Programs like the COR Sandbox create safe environments for experimentation, allowing companies to test compliance processes, share data responsibly, and pilot emerging moderation technologies.

These initiatives show how innovation and oversight can coexist, allowing teams to test AI systems or content policies under real-world conditions before full rollout.

Regulatory collaboration will define the next phase of trust and safety. By participating in these sandbox environments, gaming and tech companies demonstrate readiness for evolving compliance landscapes while maintaining the flexibility needed for innovation.

What Comes Next for Gaming and T&S?

As gaming continues to blur the lines between entertainment, community, and commerce, content moderation will define user experience as much as gameplay does. The leaders highlighted above are setting the global standard for ethical practice, accountability, and innovation in trust and safety.

GGWP sees this shift every day. Our AI-powered solutions protect users, strengthen communities, and streamline live ops with proactive moderation across text, voice, reports, and Discord. If you want to respond faster and grow smarter, get in touch.