25 Nov

For decades, communities have been moderated after the fact, with content reviewed only once it had already been posted. While delayed moderation is better than none, a cunning interloper or an overly spirited fan can quickly turn the chill vibes you wanted for your community into something far more volatile.

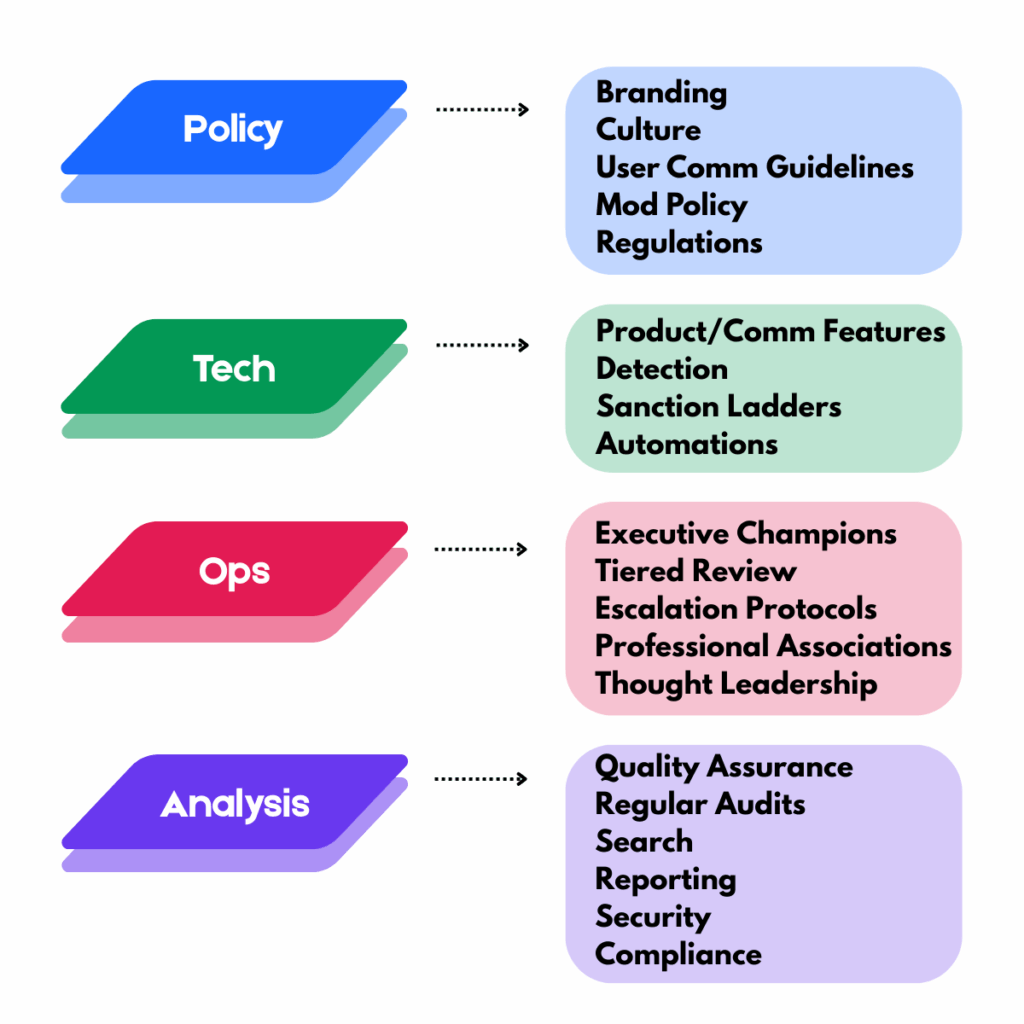

A solid Trust & Safety stack addresses this by allowing rule-breakers to be handled almost instantaneously. Because migrating to a more automated system can be daunting, here’s an explainer of the most common components of a Trust & Safety system built around real-time moderation.

Quick Takeaways

- Real-time moderation uses automation to remove harmful messages as soon as they appear.

- Live Filtering can detect profanity, threats, spam, and evasive language.

- Context-aware models and tools reduce false positives and allow healthy banter to continue.

- Strong moderation supports player retention, trust, and higher downstream spend.

- Effective systems operate at speed without turning moderators into bottlenecks.

What Are the Business Benefits of Investing in Trust & Safety?

Retention

Harassment and toxicity cause new users to churn early. Real-time moderation keeps conversations on point and welcoming so users continue interacting and eventually convert into long-term spenders.

Protection

Removing slurs, threats, and abuse protects all users, but especially casual audience members who may not be accustomed to more volatile environments.

Fair Play

Real-time moderation can also prevent scams and cheating coordination, which disrupt the flow of conversation and, at worst, can endanger your users personally or financially.

Brand Risk

Communities with unchecked toxic interactions quickly gain negative reputations. Moderation protects against viral clips, bad press coverage, and audience backlash that can permanently damage trust.

Tools Used to Manage Communities

Communities are even better with great communication and automated tools to enhance the experience.

User Guidelines

The first line of defense against toxicity is to be clear with your audience about what you expect in terms of appropriate content and behavior. Keeping these guidelines readily available and written in simple, easy-to-understand language goes a long way toward establishing the culture you want for your community.

Contextual Messaging

Whenever possible, you should communicate the culture you want in your community. There are many ways to achieve this:

- Through integrated community managers that communicate within the community

- Through messaging and signage throughout the experience that reinforce the guidelines and the general vibe you envision

- Through notifications to the user before, during and after interactions that let them know when they are on (or off) track

Communication is always the best way to get your goals across to your users.

Automated Filter Engines

These scan text and voice channels in real time using pre-set rules and machine-learning models.

Rule-Based Results

Moderation and Trust & Safety teams design policy flows that determine what happens after each violation. For example, a “Sanction Ladder” could look like this:

- 1st violation → message removed + warning

- 2nd violation → auto-mute 30 minutes

- 3rd violation → temporary ban from chat/commenting

A toolset like GGWP that can be configured to apply these sanctions automatically helps even more, especially at higher content volumes.
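To make the ladder concrete, here is a minimal sketch of how the three rungs above might be tracked per user. It is an illustration only, not GGWP’s API: the `SanctionTracker` class, the sanction names, and the choice to keep repeat offenders on the last rung are all assumptions.

```python
from collections import defaultdict

# Hypothetical ladder: one entry per rung, mirroring the three steps above.
SANCTION_LADDER = [
    "remove_message_and_warn",  # 1st violation
    "mute_30_minutes",          # 2nd violation
    "temporary_chat_ban",       # 3rd violation (assumed to repeat afterwards)
]


class SanctionTracker:
    """Counts violations per user and returns the matching rung of the ladder."""

    def __init__(self, ladder=SANCTION_LADDER):
        self.ladder = ladder
        self.violations = defaultdict(int)

    def record_violation(self, user_id):
        self.violations[user_id] += 1
        # Clamp to the last rung so repeat offenders stay at the strictest sanction.
        rung = min(self.violations[user_id], len(self.ladder)) - 1
        return self.ladder[rung]
```

A real system would likely also let violation counts expire over time, so that one bad week does not follow a user forever.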

User Reporting & Appeals Tools

Users can report messages that they feel may violate the guidelines. This gives them a chance to feel involved in building the culture and, depending on how their report or appeal is handled, to help shape the rules or understand them better.

Moderator Dashboards

Moderators should have access to accurate views of flagged content, player reputations, incident queues, and live sentiment analysis. Modern dashboards, like GGWP’s Community Copilot, include tools for muting, suspending, and messaging players to provide context.

How a Real-Time Moderation System Works

Using a layered moderation system, like GGWP, allows your team to detect, analyze, and respond to harmful content in seconds.

1. Live Filtering

Live filtering relies on categorized lists of banned words, slurs, and high-risk phrases. When a player types something that matches a trigger, the system automatically blocks, masks, or removes it before others can see it, depending on how your team wants that content handled. Live filtering lists are regularly updated to include new slang, misspellings, or obfuscations players use to try to bypass filters.

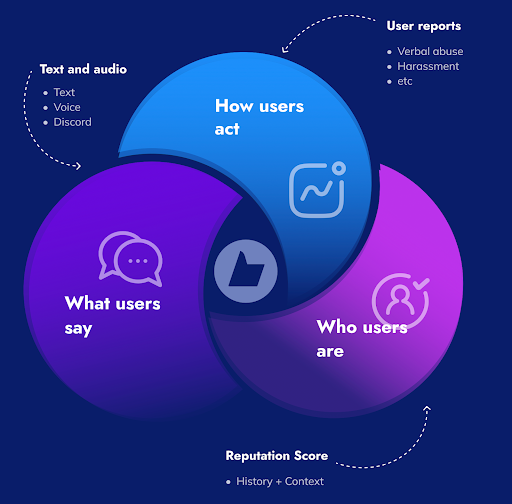
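As a rough sketch of the live-filtering step, the snippet below checks a message against categorized trigger lists and either masks or blocks it. The lists, the category names, and the per-category actions are placeholder assumptions; a production list would be far larger and regularly updated.

```python
import re

# Hypothetical categorized trigger lists (mild placeholder terms).
BANNED_TERMS = {
    "profanity": {"darn", "heck"},
    "threat": {"i will find you"},
}

# Hypothetical handling chosen by the team for each category.
CATEGORY_ACTION = {"profanity": "mask", "threat": "block"}


def filter_message(text):
    """Return (action, text_to_deliver); text_to_deliver is None when blocked."""
    matches = []
    for category, terms in BANNED_TERMS.items():
        for term in terms:
            # Word boundaries avoid flagging a term inside a longer, harmless word.
            pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
            if pattern.search(text):
                matches.append((CATEGORY_ACTION[category], pattern))

    # Blocking takes priority over masking.
    if any(action == "block" for action, _ in matches):
        return "block", None

    result = text
    for _, pattern in matches:
        result = pattern.sub(lambda m: "*" * len(m.group()), result)
    return ("mask" if matches else "allow"), result
```

For example, `filter_message("Well darn it")` returns `("mask", "Well **** it")`, while a message containing the threat phrase is never delivered.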

2. Contextual Review

Community cultures can be passionate and colorful: gaming chats can be full of slang and playful trash-talk, and political forums can host heated debates.

Contextual models help separate banter from harassment by analyzing tone, prior messages, history with teammates, and escalation patterns.

For example, “you’re trash” between long-term gaming partners might be ignored, while the same message directed at a new player in a public lobby might trigger a warning.
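The “you’re trash” example can be sketched as a small decision function. Real contextual models are typically machine-learned; the hand-written rules, field names, and thresholds below are assumptions that only illustrate which signals feed the decision.

```python
from dataclasses import dataclass


@dataclass
class MessageContext:
    games_played_together: int    # history between sender and recipient
    recipient_is_new: bool        # e.g. a recently created account
    public_channel: bool          # public lobby vs. private party chat
    recent_hostile_messages: int  # escalation signal from prior messages


def review_insult(ctx):
    """Decide how to treat a mild insult such as "you're trash"."""
    # Repeated hostility suggests escalation, whoever is involved (assumed threshold).
    if ctx.recent_hostile_messages >= 3:
        return "escalate"
    # Long-term partners in a private chat are probably bantering.
    if ctx.games_played_together >= 20 and not ctx.public_channel:
        return "ignore"
    # New players and public lobbies get the benefit of a warning.
    if ctx.recipient_is_new or ctx.public_channel:
        return "warn"
    return "ignore"
```

The same message yields `"ignore"` for long-term partners in a private chat and `"warn"` for a new player in a public lobby.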

3. Player Reputation

Factoring in a player’s reputation adds another layer of accuracy to moderation decisions, especially automated ones. GGWP’s Community Copilot can raise or lower a user’s overall reputation based on criteria you designate, so the system can use previous interactions to tell whether that user is simply having an off day or whether there may be malicious intent behind their actions.
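One way to picture a reputation score is as a bounded number that moves with each event. The sketch below is not how Community Copilot computes reputation; the event names, point values, 0 to 100 range, and the “off day” threshold are all assumptions for illustration.

```python
# Hypothetical point values for each kind of event.
EVENT_DELTAS = {
    "positive_interaction": 1.0,
    "confirmed_violation": -15.0,
    "successful_appeal": 10.0,
}


class ReputationTracker:
    """Keeps a per-user score clamped to the range 0..100."""

    def __init__(self, start=50.0):
        self.start = start
        self.scores = {}

    def score(self, user_id):
        return self.scores.get(user_id, self.start)

    def record(self, user_id, event):
        new_score = self.score(user_id) + EVENT_DELTAS[event]
        self.scores[user_id] = max(0.0, min(100.0, new_score))
        return self.scores[user_id]

    def likely_off_day(self, user_id, threshold=40.0):
        # A user still above the threshold after a violation has enough
        # positive history to be treated leniently (assumed policy).
        return self.score(user_id) >= threshold
```

With these values, a user with twenty positive interactions who commits one violation lands at 55 and is still treated as having an off day, while a brand-new user with one violation drops to 35 and is not.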

Real-Time Enforcement Actions

When a rule is triggered, a properly calibrated moderation system, like GGWP’s, can react instantly without waiting for a moderator. Some actions that customers have utilized include:

- Block – prevents the message from being delivered

- Mask – replaces text with asterisks or placeholder symbols

- Mute – silences the offending player for a limited period

- Shadowban – allows a player to type while hiding their messages from everyone else

- Notify staff – flags a message for manual review if human intervention is required

Staff moderators can later confirm, overturn, or escalate cases that require a little more oversight.
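The difference between blocking, masking, and shadowbanning is easiest to see in terms of who ends up seeing what. The sketch below models only those three actions (muting and staff notification involve user state and queues, which are left out); the `Action` enum and `deliver` function are hypothetical names, and masking here hides the whole message for simplicity.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    MASK = "mask"
    SHADOWBAN = "shadowban"


def deliver(text, sender, audience, action):
    """Map each user in the audience (sender included) to the text they will see."""
    if action is Action.BLOCK:
        # Nobody receives the message.
        return {}
    if action is Action.SHADOWBAN:
        # Only the sender sees the message, so they are unaware it was hidden.
        return {sender: text}
    shown = "*" * len(text) if action is Action.MASK else text
    return {user: shown for user in audience}
```

For instance, `deliver("gg", "a", ["a", "b"], Action.SHADOWBAN)` returns `{"a": "gg"}`: the sender sees their own message, and nobody else does.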

Understanding Trigger Settings

To run effective real-time moderation, brands rely on a mix of system triggers. These settings control how quickly a message is flagged, what action is taken, and how the system adapts to different types of behavior. The most common categories include:

Severity Levels

Some systems use graded categories:

- Tier 1 (spam, low-risk trash-talk): warn or ignore

- Tier 2 (harassment, targeted insults): delete + mute

- Tier 3 (slurs, threats): instant mute/ban, escalate
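The tiers above map naturally onto a lookup table. In this sketch the category names and action labels are assumptions, Tier 1 is set to warn rather than ignore, and a message that matches several categories is handled at its highest tier.

```python
# Hypothetical mapping from detected category to severity tier.
CATEGORY_TIER = {
    "spam": 1,
    "trash_talk": 1,
    "harassment": 2,
    "targeted_insult": 2,
    "slur": 3,
    "threat": 3,
}

# Actions per tier, following the list above (Tier 1 assumed to warn).
TIER_ACTIONS = {
    1: ["warn"],
    2: ["delete", "mute"],
    3: ["mute_or_ban", "escalate"],
}


def actions_for(categories):
    """Return the actions for the most severe category detected in a message."""
    if not categories:
        return []
    tier = max(CATEGORY_TIER[category] for category in categories)
    return TIER_ACTIONS[tier]
```

So a message flagged as both spam and a threat is treated as Tier 3: `actions_for(["spam", "threat"])` returns `["mute_or_ban", "escalate"]`.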

Cooldown Windows

Cooldown windows prevent flood messaging, where users send dozens of messages per minute.
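A cooldown is commonly implemented as a sliding window over each user’s recent message timestamps. The sketch below assumes a limit of five messages per ten seconds; both numbers and the `CooldownWindow` name are illustrative.

```python
from collections import defaultdict, deque


class CooldownWindow:
    """Allows at most max_messages per user within any window_seconds span."""

    def __init__(self, max_messages=5, window_seconds=10.0):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self.history = defaultdict(deque)

    def allow(self, user_id, now):
        timestamps = self.history[user_id]
        # Drop timestamps that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_messages:
            return False
        timestamps.append(now)
        return True
```

Passing `now` in explicitly (for example from `time.monotonic()`) keeps the class easy to test; rejected messages are not recorded, so a user regains capacity as soon as older messages age out.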

Bypass Detection

Bypass detection is trained to catch creative re-spellings (e.g., “a$$”, “1diot”, or foreign-character swaps).
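Production systems usually rely on trained models for this, but a simple normalization pass shows the basic idea: fold look-alike characters back to plain letters before matching. The substitution and homoglyph tables below are small, assumed samples rather than a complete mapping.

```python
import unicodedata

# Assumed sample of symbol and digit swaps.
SUBSTITUTIONS = str.maketrans({
    "$": "s", "5": "s", "1": "i", "0": "o",
    "3": "e", "4": "a", "@": "a", "7": "t",
})

# Assumed sample of Cyrillic look-alikes, written as escapes for clarity.
HOMOGLYPHS = str.maketrans({
    "\u0430": "a",  # Cyrillic a
    "\u0435": "e",  # Cyrillic ie
    "\u043e": "o",  # Cyrillic o
    "\u0456": "i",  # Cyrillic Ukrainian i
})


def normalize(text):
    """Return a lowercase, de-obfuscated copy of text for filter matching."""
    # Split accented letters into base letter + combining mark, then drop the marks.
    decomposed = unicodedata.normalize("NFKD", text)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return stripped.lower().translate(HOMOGLYPHS).translate(SUBSTITUTIONS)
```

The normalized text should be used only for matching, since the digit swaps also rewrite legitimate numbers; the original message is what gets delivered or masked.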

Custom Allow and Blocklists

Many communities develop their own terminology and slang, which can be handled with custom allowlists and blocklists. Allowlisting reduces false positives, so brand terms and common phrases you have deemed OK aren’t automatically punished; conversely, blocklisting lets you flag community-specific terms that a generic filter would miss.
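In set terms, custom lists adjust a default blocklist: community blocklist terms are added and allowlisted terms are removed. The function names and sample terms in this sketch are assumptions.

```python
import re


def effective_blocklist(default_terms, custom_blocklist, custom_allowlist):
    """Combine the default list with community overrides; the allowlist wins."""
    return (set(default_terms) | set(custom_blocklist)) - set(custom_allowlist)


def flagged_terms(text, blocklist):
    """Return the blocklisted single-word terms that appear in text, sorted."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return sorted(set(tokens) & set(blocklist))
```

For a community that treats “noob” as friendly slang but wants “griefer” flagged, `effective_blocklist({"noob", "trash"}, {"griefer"}, {"noob"})` yields `{"trash", "griefer"}`, and `flagged_terms("Nice try, noob. Total griefer move!", ...)` with that list returns `["griefer"]`.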

Are You Leveraging Your Users’ Real-Time Sentiment?

Besides enforcement, incorporating real-time sentiment data can be a great proactive measure in monitoring your community. By looking at positivity or negativity trends in messages over time, brands can understand:

- Feature feedback (“this bug sucks” trending → product issue)

- Meta shifts (“loving this” → brand culture)

- Churn warnings (“screen frozen again” messages rising may signal drop-off)

These insights help product and live-ops teams make better decisions before issues are brought to light on social media or review platforms.
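A full sentiment pipeline would score messages with a model, but the trend-spotting part can be sketched with simple phrase counting per time bucket. The hourly buckets, the doubling factor, and the minimum of three mentions are assumed values.

```python
from collections import Counter


def phrase_counts(messages, phrase, bucket_seconds=3600):
    """Count mentions of phrase per time bucket.

    messages is an iterable of (timestamp_seconds, text) pairs.
    """
    counts = Counter()
    for timestamp, text in messages:
        if phrase in text.lower():
            counts[int(timestamp // bucket_seconds)] += 1
    return counts


def is_rising(counts, current_bucket, factor=2.0, min_mentions=3):
    """True when mentions in the current bucket at least double the previous one."""
    previous = counts.get(current_bucket - 1, 0)
    current = counts.get(current_bucket, 0)
    # max(previous, 1) avoids treating any first mention as an infinite increase.
    return current >= min_mentions and current >= factor * max(previous, 1)
```

A rising count for a phrase like “screen frozen again” could then be routed to the product or live-ops team as an early warning.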

Is Trust & Safety Changing?

Brands are moving toward unified moderation pipelines where text, voice, and visual content (profile pics, memes, screenshots) are filtered in the same real-time flow and then aggregated to give a holistic picture of each user. Smart enforcement ladders are replacing one-size-fits-all bans. Moderation is also becoming a closed loop with content and product dev teams.

Conversation tone helps communities make decisions, identifies broken systems, and highlights high-friction product areas. Community feedback shouldn’t just be scraped from the depths of Reddit or YouTube; it starts inside your community.

If your current chat moderation can’t keep up, GGWP’s Community Copilot can help. Our real-time tools detect, filter, and escalate harmful messages across voice, chat, and Discord with policy-aligned precision. Contact us to build a safer, more engaging community that keeps your players coming back.