09 Nov

Game Changer: Impact of Chat Sanctions on Toxicity

Recently, Omeda Studios shared results on how GGWP’s AI-based chat moderation has reduced toxic behavior within Predecessor, their early-access AAA MOBA. We are thrilled with these findings, which are detailed further in a case study published by GamesBeat. In this post, we want to dive into the numbers and GGWP’s methodology to provide more context on how our solutions help reduce the recurrence of toxic behaviors within games.

The Challenge

Toxic behavior in online games is not just a minor inconvenience. The Anti-Defamation League reports that up to 81% of adults who play online games have experienced some form of harassment, with a significant portion of that abuse targeted at specific groups and people based on identity factors. Additional studies have shown that negative interactions can significantly impact player retention and satisfaction. Given the sheer number of messages exchanged daily, it is nearly impossible to manually review and address every single one. The difficulty lies not only in processing the large volume of messages but also in responding quickly and effectively enough to deter subsequent toxic behavior.

In recent years, there has been a paradigm shift in how games approach community moderation. Previously, many games operated under the belief that they could improve player experience by simply kicking out or banning the so-called “bad actors.” However, research has shown that only around 5% of players are consistently disruptive, which means that the remaining players act out only once in a while, typically when they get tilted. Because of this, an increasing number of games are now focusing on educating players about the repercussions of toxic behavior.

This is the main purpose of our moderation tool as well: we want to educate and warn players about their toxic behavior while nudging them towards better conduct in the future. Our tool gives players who might just be having a bad day the opportunity to cool down through session mutes, while issuing longer, more stringent penalties to repeat offenders, making it clear that persistent toxicity will not be tolerated.

The Solution

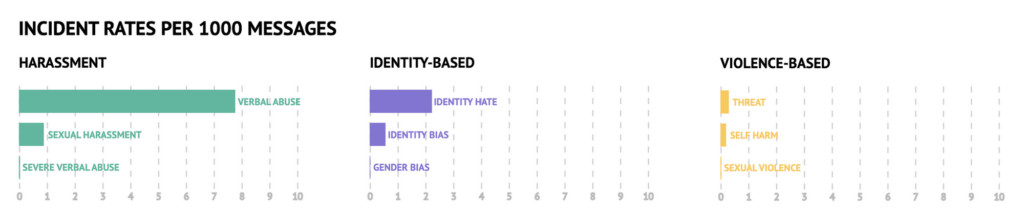

Our approach to chat moderation is threefold:

- Live Filtering: Chat messages are processed instantly so that offensive words and slurs are filtered out in real-time, as a first line of defense against offensive messages.

- Advanced Detection Models: Beyond just individual words and phrases, we deploy a variety of custom language models, which run within seconds, to flag different types of toxic messages that contain more nuanced identity hate, threats, verbal abuse, harassment, etc.

- Behavior-Based Sanctions: At the player level, we look at historical behavioral patterns and current session behavior in order to apply appropriate sanctions. These sanctions can range from warnings (session mutes) for those caught up in the heat of the moment, to harsher multi-day mutes for players who have demonstrated a history of toxic behavior.
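The three stages above can be sketched in a few lines of Python. This is only an illustrative outline, not GGWP's implementation: the blocklist, the `score` callable standing in for a detection model, the 0.8 threshold, the `PlayerHistory` fields, and the sanction tier names are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical blocklist for illustration; a real list would be far larger.
BLOCKLIST = {"badword", "slur"}


def live_filter(message: str) -> str:
    """Stage 1: mask blocklisted words before the message is displayed."""
    def mask(match: re.Match) -> str:
        word = match.group(0)
        return "*" * len(word) if word.lower() in BLOCKLIST else word
    return re.sub(r"[A-Za-z']+", mask, message)


def is_toxic(message: str, score: Callable[[str], float], threshold: float = 0.8) -> bool:
    """Stage 2: flag a message when a (hypothetical) model score crosses a threshold."""
    return score(message) >= threshold


@dataclass
class PlayerHistory:
    prior_sanctions: int  # sanctions recorded in earlier sessions
    session_flags: int    # messages flagged in the current session


def choose_sanction(history: PlayerHistory) -> str:
    """Stage 3: escalate from a session mute to a multi-day mute for repeat offenders."""
    if history.session_flags == 0:
        return "none"
    if history.prior_sanctions == 0:
        return "session_mute"    # warning for a heat-of-the-moment offense
    return "multi_day_mute"      # harsher penalty for a history of toxicity


print(live_filter("you badword!"))           # you *******!
print(choose_sanction(PlayerHistory(0, 1)))  # session_mute
print(choose_sanction(PlayerHistory(2, 1)))  # multi_day_mute
```

Keeping the sanction decision separate from detection means the escalation rules can be tuned per game without retraining or replacing the models.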

The Outcome

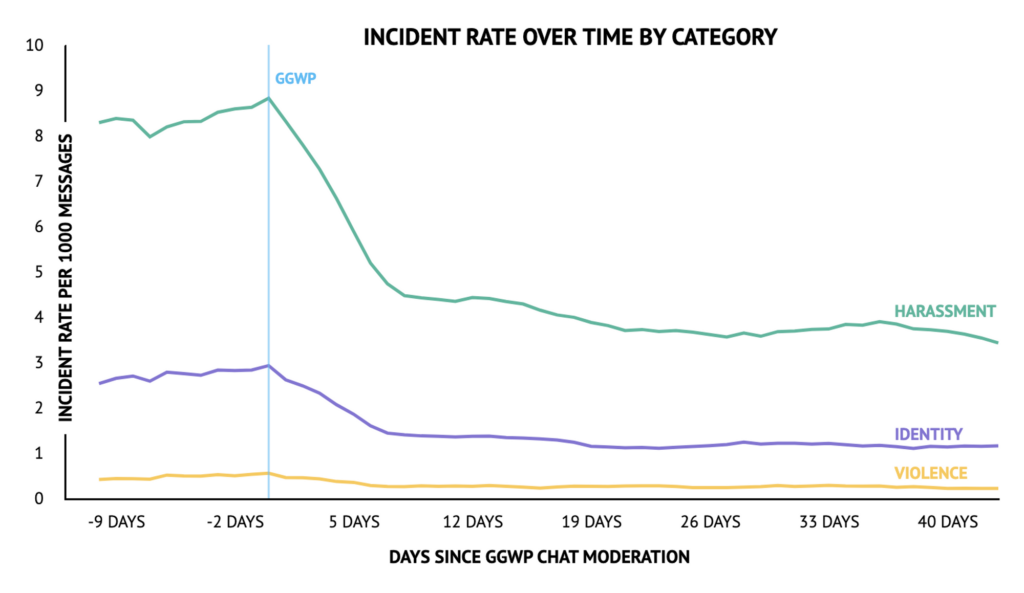

Since integrating our moderation tool, Predecessor has experienced a significant decrease in the rate of offensive chat messages sent, as well as a decline in repeat infractions from the subset of players who have received system warnings and sanctions.

Overall, there has been a 35.6% decrease in toxic messages sent per game session. In addition, 56.3% of actively chatting players have sent offensive messages at a lower rate since the auto-moderation system was implemented.
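For readers who want to reproduce this kind of metric on their own data, here is a minimal sketch of how such figures could be computed. The function names and the sample numbers are made up for illustration; they are not Predecessor data, and the exact definitions used in the case study may differ.

```python
def percent_decrease(before: float, after: float) -> float:
    """Relative drop from `before` to `after`, expressed as a percentage."""
    if before <= 0:
        raise ValueError("`before` must be positive")
    return (before - after) / before * 100


def share_improved(rates: dict[str, tuple[float, float]]) -> float:
    """Percentage of players whose offensive-message rate fell.

    `rates` maps a player ID to (rate before, rate after).
    """
    improved = sum(1 for before, after in rates.values() if after < before)
    return improved / len(rates) * 100


# Hypothetical toxic messages per session, before and after moderation.
print(percent_decrease(2.0, 1.5))  # 25.0

# Hypothetical per-player rates of offensive messages.
players = {
    "p1": (0.40, 0.10),
    "p2": (0.20, 0.25),
    "p3": (0.30, 0.15),
    "p4": (0.50, 0.20),
}
print(share_improved(players))  # 75.0
```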

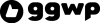

There has also been a substantial decrease in the rate of different types of toxic incidents:

- 58.0% decrease in identity-related incidents

- 55.8% decrease in violence-related incidents

- 58.6% decrease in harassment incidents

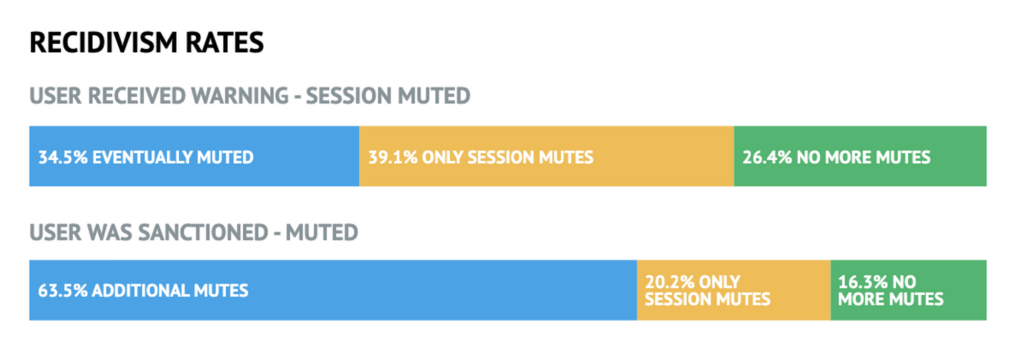

We have also found that sanctions and warnings have so far been effective in reducing repeat chat violations among players:

- 65.5% of players who have received warnings have not received a more severe, longer-term sanction

- 41.1% of players who were sanctioned for 1 or more days have not committed another serious chat offense

Simple warnings (e.g. session mutes), designed to de-escalate occasional heated moments, have deterred future misconduct in a majority of players. Around 65% of players who received such warnings did not go on to commit more serious chat infractions or show the consistent toxic behavior that warrants harsher sanctions.

Players who consistently exhibited toxicity, and therefore reached more severe sanctions, frequently incurred additional sanctions. Imposing these repeated sanctions on players with longer-term patterns of bad behavior has been instrumental in preventing others from falling victim to their toxic behavior.

Conclusion

For a long time, game developers have struggled with the whack-a-mole challenge of curbing toxicity and longed for a more proactive approach that encourages users to behave more pro-socially while keeping them in the game. The key takeaway from these results is that a proactive solution like GGWP doesn’t just block offensive chat in real time. It also serves as a strong deterrent that prompts players to think twice before sending offensive messages and reduces repeat chat violations. These published results from Omeda are very exciting because they show what’s possible when developers commit to fostering a more inclusive and respectful environment within online gaming. We are looking forward to seeing what happens as more gaming communities adopt this approach.