18 Sep

More often than not, “community management” gets lumped in with reactive moderation. Someone posts harmful content, a team member removes it, and the process repeats in an endless loop.

But when studios treat community management as a strategic asset, one built on culture and long-term engagement, they see the results they’ve been looking for.

It affects retention and churn rates, boosts creator trust, and encourages healthy communities.

So, how do you make the shift from basic moderation to a culture you’re proud of? Let’s dive in.

Quick Takeaways:

- Community management is part of your brand’s long-term growth.

- Thoughtful guidelines help set the tone early and build the trust players are looking for.

- Automated tools are great, and they can help guide your culture, not just flag content.

- Creators are more likely to invest in your ecosystem when they feel protected.

- Cross-team collaboration makes everything more effective.

What Community Management Looks Like Today

According to Deloitte, as of February 2024, 83 million of the 110 million U.S. online multiplayer gamers experienced harassment in their online games over the past 6 months. That’s over 75% – and we see similar rates in non-gaming communities as well.

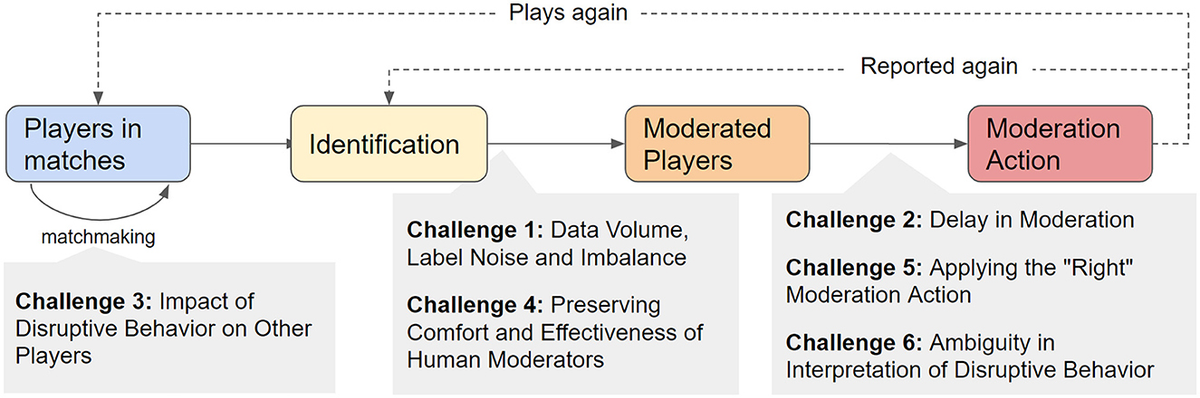

Because of that, community management is still a major job for most brands. It’s also one that’s incredibly important for the protection of everyone involved. However, manually taking care of issues as they come isn’t necessarily the most effective way to handle infractions.

Moderator teams sometimes feel stuck in a loop, spending most of their time putting out fires instead of building and maintaining an excellent community.

They might catch harmful behavior after it spreads, but that isn’t sustainable for moderators or users. In this reactive dynamic, players may not know what’s expected of them until it’s too late, and moderators are left scrambling to fix situations that could’ve been prevented with clearer boundaries, better tools, or stronger alignment across the board.

Creating Guidelines That Actually Work

Good community culture starts with clear, fair, and thoughtful guidelines.

If your policies are vague or outdated, or written without considering the community, you’ll have a lot of adjustments to make. People won’t follow the rules, and your moderation team won’t have a solid foundation to work from. That sets everyone up for failure. What helps:

- Be specific about what’s not allowed and why.

- Share real examples when possible (players want to know what lines they shouldn’t cross).

- Bring in diverse voices when you create or revise community guidelines.

- Make sure creators are part of the conversation—they often have their own communities to manage, too.

You don’t have to (and shouldn’t) write a 10-page handbook. Just be intentional.

Automate the Right Way

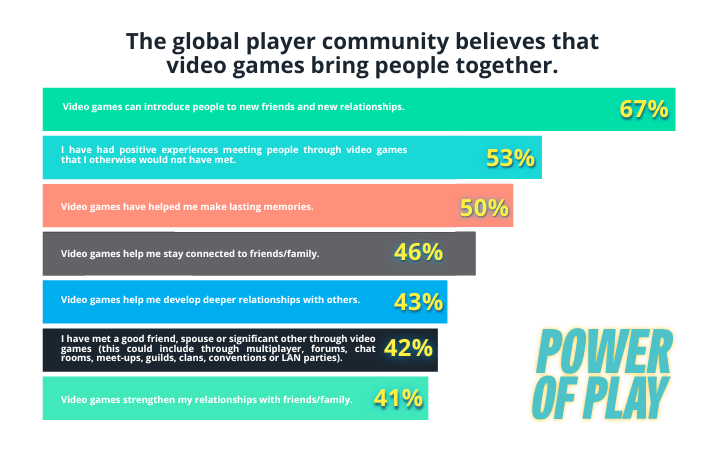

Communities can be massive, and for good reason. Games help people make friends, strengthen bonds with current ones, and improve overall happiness.

But many are too big to manage manually. If that’s the case for yours, automation will help significantly.

Automation isn’t a magic potion, though. If all your system does is remove flagged words, it might miss the bigger picture (or worse, overcorrect and hurt your credibility). Studios need tools that:

- Understand context (especially with voice)

- Escalate more nuanced issues to a human when needed

- Communicate clearly to users when actions are taken

Keep in mind that automation is a support tool, not a replacement. It should extend your values, not just enforce rules blindly.
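To make the three requirements above concrete, here is a minimal sketch of how a flagged message might be routed. It assumes a context-aware severity score between 0 and 1 already exists upstream; the thresholds, the `route_flag` function, and the notice wording are all hypothetical and would need tuning against your own community data.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune these against your own data.
AUTO_ACTION_THRESHOLD = 0.9
HUMAN_REVIEW_THRESHOLD = 0.5


@dataclass
class Decision:
    route: str        # "auto_action", "human_review", or "no_action"
    user_notice: str  # message shown to the user; empty if none is sent


def route_flag(severity: float, rule: str) -> Decision:
    """Route a flagged message using a context-aware severity score in [0, 1]."""
    if not 0.0 <= severity <= 1.0:
        raise ValueError("severity must be between 0 and 1")
    if severity >= AUTO_ACTION_THRESHOLD:
        # Clear-cut cases are handled automatically, and the user is told why.
        notice = f"Your message was removed because it broke our rule on {rule}."
        return Decision("auto_action", notice)
    if severity >= HUMAN_REVIEW_THRESHOLD:
        # Nuanced cases go to a person; nothing is sent until they decide.
        return Decision("human_review", "")
    return Decision("no_action", "")
```

The middle band is the important part: anything the system is unsure about lands in front of a human instead of triggering an automatic penalty.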

Want to Support Creators? Build a Safe Space

Creators are loyal to communities they can trust to support them. They aren’t just meme machines; they’re building audiences, investing time, and creating income streams tied to your game. If they don’t feel protected, they’ll find another community to join. Creators want:

- Quick response times when issues pop up

- Transparent enforcement so creators understand what happened and why

- A culture that values creativity, not just content volume

Blocking harassment is only a small part of safety. Creators need to feel that you respect them.

Community Isn’t Just One Team’s Job

It takes a village, right? For culture-building to work, studios need total alignment across teams.

Community managers and Trust & Safety teams can’t do it alone. They need support from marketing, player support, and even product and engineering teams. Everyone should have shared goals and know what the game plan is and how to move it forward.

Trust & Safety is a major factor here. It defines the enforcement standards, handles escalations, and makes sure moderation practices reflect both the community’s values and legal requirements.

Staying compliant with regulations like the EU’s Digital Services Act (DSA), the UK’s Online Safety Act (OSA), and the U.S. Children’s Online Privacy Protection Act (COPPA) helps you avoid penalties, reduce risk, build credibility, and protect players. When everyone works from the same standards, moderation feels more consistent, fair, and defensible. That means:

- Regular check-ins between teams

- Clear escalation processes

- Metrics that measure not just negative behavior, but positive engagement, too

When internal teams work together, everything flows more smoothly. It’s easier to spot trends, respond quickly, and build the kind of community players want to be part of.

Most Community Management Tools Are Missing Something

Again, most tools in the moderation space focus on flagging and removing content.

Valuable? Yes. Reactive? Also yes, and that isn’t setting you up for success in the long run. Most major community tools are ignoring one key component of success: alignment.

They simply don’t prioritize detection, enforcement, and culture at the same time. The future of community management isn’t about “what to block”, but about “what kind of community do we want to build and how can we bring that to fruition?”

What Good Culture Management Looks Like

Let’s say someone makes a borderline comment in a public voice channel. Maybe it’s not technically against the rules, but it makes other players uncomfortable.

A great system won’t just ignore it or auto-ban the speaker. Instead, it protects the tone you want your community to have. Here’s what an ideal workflow might look like:

- The comment is flagged for review (automatically or by another player).

- A context-aware moderation system reviews the comment alongside the rest of the conversation and the player’s reputation.

- Given all the information, if needed:

  - A short, respectful message is sent explaining community expectations.

  - A temporary timeout is issued with a clear explanation.

- Feedback about the comment is recorded to help refine future moderation efforts.

Sure, this can happen with a human team, but once you have solid processes and guidelines, all of this can happen in an automated fashion if your volumes require it.

Plus, players usually engage in banter and a healthy level of trash talk. If auto-banning is too aggressive, users may feel like they can’t fully connect with their friends.
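The workflow above could be sketched roughly as follows. This is an illustration under stated assumptions, not a description of any particular product: the `Flag` and `Moderator` classes, the 0 to 1 `context_score`, the cutoff values, and the use of prior violations as a stand-in for reputation are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Flag:
    player_id: str
    context_score: float   # 0 = harmless banter, 1 = clearly harmful (hypothetical scale)
    prior_violations: int  # simple stand-in for the player's reputation


@dataclass
class Moderator:
    feedback_log: list = field(default_factory=list)

    def respond(self, flag: Flag) -> str:
        """Pick the mildest response that fits the context and the player's history."""
        if flag.context_score < 0.3:
            action = "none"      # friendly trash talk; leave it alone
        elif flag.context_score < 0.7 and flag.prior_violations == 0:
            action = "reminder"  # short, respectful note on expectations
        else:
            action = "timeout"   # temporary, with a clear explanation
        # Record the outcome so later reviews can refine the cutoffs.
        self.feedback_log.append((flag.player_id, action))
        return action
```

Note that the same borderline comment gets a reminder for a first-time player and a timeout for a repeat offender, and that low-scoring banter is left alone entirely.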

Measuring Your Progress

If you want to know whether your community strategy is working or not, don’t just tally up bans. Your Community KPIs should include disciplinary actions, but your team should also look for:

- Creator retention and satisfaction

- Player engagement over time

- Fewer repeat violations

- Sentiment trends in community feedback

- Growth in positive player interactions

When culture and morale improve, so does player behavior. And that creates more room for creativity, collaboration, and lasting success.
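Two of the metrics listed above are simple enough to compute from data most teams already have. The sketch below assumes you can export a list of sanctioned player IDs for a period and a count of positive interactions per period; the function names and the definitions of "repeat" and "positive interaction" are illustrative assumptions, so adapt them to how your team defines each metric.

```python
from collections import Counter


def repeat_violation_rate(violator_ids: list[str]) -> float:
    """Share of sanctioned players who were sanctioned more than once in the period."""
    counts = Counter(violator_ids)
    if not counts:
        return 0.0
    repeaters = sum(1 for n in counts.values() if n > 1)
    return repeaters / len(counts)


def positive_interaction_growth(previous: int, current: int) -> float:
    """Relative change in positive interactions between two periods."""
    if previous <= 0:
        raise ValueError("previous period must have at least one interaction")
    return (current - previous) / previous
```

Tracking these period over period, alongside ban counts, shows whether enforcement is actually changing behavior or just removing content.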

Prioritizing Community Culture Is Easier Than Ever

Community management is easy when you’re just trying to tidy up, but creating a culture that users are proud of? It’s a worthy investment.

The brands that encourage a strong culture early on will see gains over the long haul. Not just in numbers, but in how people talk about your product, how creators feel when they log on, and how new community members are welcomed when they join.

If you aren’t getting the community moderation results you want, GGWP has solutions for you. Our suite of moderation and analytics tools is designed to help bridge the trust gap between creators and users. Contact us to learn how we can make your community safer and more inclusive!