24 Sep

Voice moderation isn’t a “nice-to-have” feature anymore. Toxic voice chat has been part of multiplayer gaming for decades, and some still brush it off as “just the culture.” But normalizing extreme trash talk, harassment, and verbal abuse in online matches comes at a cost. Left unchecked, it hurts player retention, damages brand reputation, and can even drag down long-term revenue growth for studios.

Voice moderation is an asset for any developer looking to create safer, more welcoming gaming spaces while also protecting their mission and image. The technology has advanced to the point where games can monitor, assess, and act on voice communication at scale (without slowing down gameplay or leaning on overworked human moderation teams).

Quick Takeaways

- Voice moderation helps protect player experience and company revenue by reducing toxicity.

- “Toxic culture” in games leads to churn, negative publicity, and fewer long-term users.

- AI-powered tools analyze speech in real time, identifying harmful behavior before it escalates.

- Combining voice, in-game chat, Discord, and user reports creates a more reliable view of behavior.

- More data points mean more precise moderation decisions and easier automation of sanctions.

Why “Toxic Culture” Hurts Gaming Businesses

Multiplayer games flourish when players are (and stay) invested. Toxic voice chat creates a ripple effect that’s hard to recover from.

A reported 83 million online multiplayer gamers have experienced hate and harassment. Players who consistently experience that abuse are less likely to keep playing, less likely to spend money in-game, and more likely to share negative feedback about the community.

Developers who excuse toxic environments as “just gaming culture” underestimate the business consequences waiting around the corner.

While hardcore players might accept (or even embrace) extreme trash talk, many others leave quietly, draining the overall player base. Revenue loss comes from multiple angles:

- Player churn: New or casual players quit faster when exposed to harassment.

- Lower in-game spending: Players who feel unsafe or unwelcome are less inclined to invest in cosmetics, expansions, or upgrades.

- Brand reputation: Toxic culture leads to negative press and pushes parents, sponsors, and advertisers away from supporting games.

- Competitive disadvantage: Studios that adopt voice moderation attract a larger audience, including groups who might otherwise avoid online play, leaving studios that ignore toxicity behind.

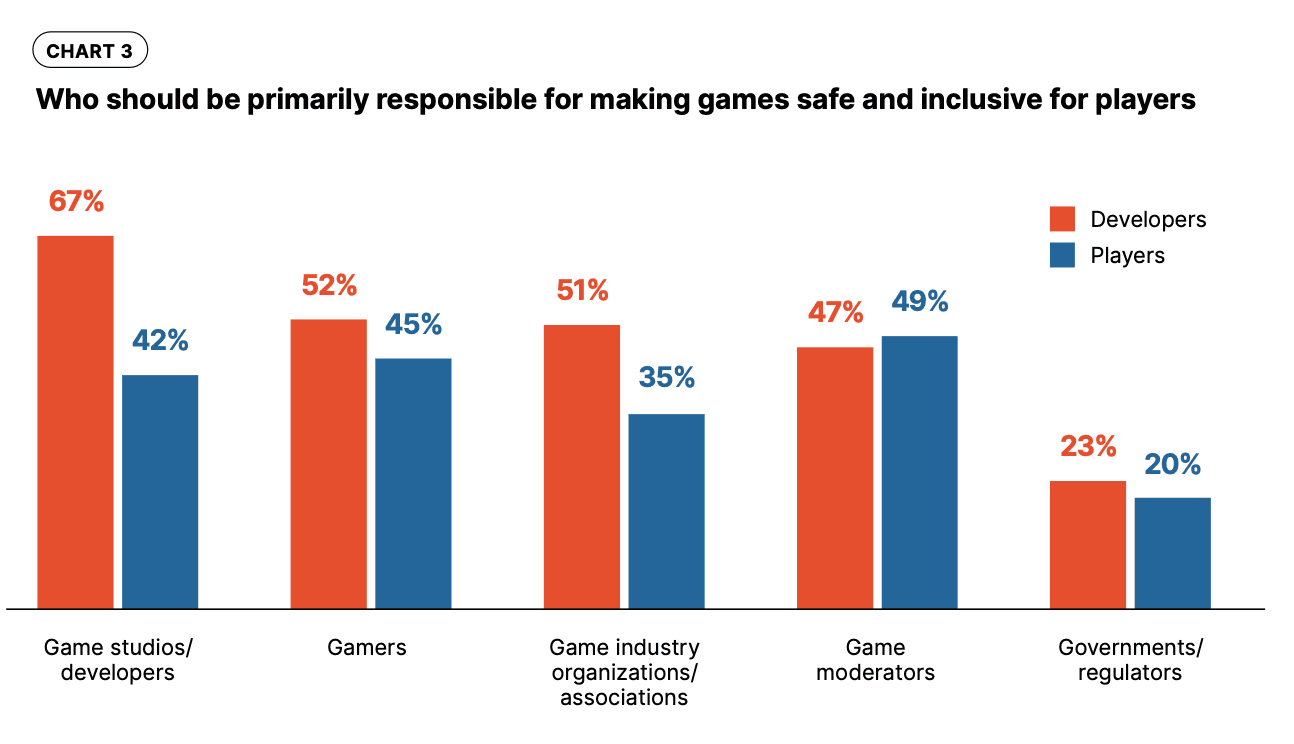

Voice moderation doesn’t just improve community well-being. It’s directly tied to growth and sustainability in an incredibly competitive industry. Everyone should do their part, including studios, developers, mods, and gamers themselves.

How Voice Moderation Works

Voice moderation uses AI-powered tools to process voice communication in real time. These tools listen for specific indicators of sexual harassment, hate speech, self-harm, threats, bullying, or other harmful language.

Once an incident is flagged, the system can log it, score its severity, and send alerts for review (or automatically apply penalties when the behavior is obvious).

Unlike older methods that relied only on player reports, voice moderation adds a proactive layer of protection that every platform needs. It relieves players of the burden of calling out every instance of toxic or borderline behavior. Its main functions include:

- Speech recognition: Converting spoken words into text for analysis.

- Context analysis: Differentiating between competitive banter and harmful intent.

- Severity scoring: Ranking how serious a violation is, from minor trash talk to serious threats.

- Real-time alerts: Flagging incidents as they happen for immediate response.

- Automated enforcement: Issuing warnings, mutes, or suspensions when rules are clearly broken.

By incorporating these processes directly into multiplayer systems, developers create smoother, safer experiences that players greatly appreciate.
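To see how those functions might fit together, here is a minimal Python sketch of what could happen after speech recognition has produced a transcript: detect categories, apply a context rule, score severity, and pick an action. Everything in it is hypothetical and chosen for illustration: the tiny phrase lexicon, the 0 to 3 severity scale, the action names, and the `same_party` flag standing in for context analysis. Real systems typically rely on trained models rather than word lists, and this is not GGWP's implementation or API.

```python
from dataclasses import dataclass, field

# Hypothetical phrase lexicon: category -> trigger phrases.
# A stand-in for a trained classifier, used here only for illustration.
HYPOTHETICAL_LEXICON = {
    "threat": ["i will find you", "kill you irl"],
    "hate_speech": ["<slur>"],  # placeholder token, not a real word list
    "bullying": ["nobody wants you here"],
    "insult": ["trash", "noob", "uninstall"],
}

# Hypothetical base severity per category (0 = none, 3 = most severe).
BASE_SEVERITY = {"insult": 1, "bullying": 2, "hate_speech": 3, "threat": 3}

# Categories that context is allowed to soften (friendly banter).
BANTER_CATEGORIES = {"insult"}

# Hypothetical mapping from final severity to an enforcement action.
ACTIONS = {0: "none", 1: "log", 2: "warn", 3: "mute"}


@dataclass
class Incident:
    speaker_id: str
    transcript: str
    categories: list[str] = field(default_factory=list)
    severity: int = 0
    action: str = "none"


def detect_categories(transcript: str) -> list[str]:
    """Return every category with at least one trigger phrase in the transcript."""
    text = transcript.lower()
    return [cat for cat, phrases in HYPOTHETICAL_LEXICON.items()
            if any(phrase in text for phrase in phrases)]


def score_utterance(speaker_id: str, transcript: str, same_party: bool) -> Incident:
    """Categorize one transcribed utterance, apply a context rule, and choose an action."""
    incident = Incident(speaker_id, transcript)
    incident.categories = detect_categories(transcript)
    for cat in incident.categories:
        severity = BASE_SEVERITY[cat]
        # Context rule (assumption): insults between party members are treated
        # as banter and softened; threats and hate speech are never discounted.
        if same_party and cat in BANTER_CATEGORIES:
            severity = max(severity - 1, 0)
        # The incident takes the severity of its worst category.
        incident.severity = max(incident.severity, severity)
    incident.action = ACTIONS[incident.severity]
    return incident


print(score_utterance("p1", "You're trash, uninstall", same_party=False).action)  # log
print(score_utterance("p1", "You're trash, uninstall", same_party=True).action)   # none
print(score_utterance("p2", "I will find you and kill you IRL", same_party=True).action)  # mute
```

With these made-up settings, trash talk from an opponent is only logged, the same line between party members is treated as banter, and a threat lands at the top of the scale regardless of context. The design point is that context may soften borderline categories but never discounts threats or hate speech.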

Why Combining Voice, Text, Discord, and Reports Is Powerful

One of the biggest challenges in moderation is context.

Voice chat alone doesn’t always tell the whole story. What sounds like harassment in one situation might just be friends joking around. Likewise, a single report might not be enough evidence for fair enforcement.

That’s why combining multiple data points is crucial. By integrating voice chat, in-game text chat, external platforms like Discord, and user-submitted reports, studios get a much clearer picture of player behavior. Here’s why you should care:

- Cross-verification: If toxic behavior shows up in both voice and chat logs, it strengthens the case for action.

- Pattern recognition: A player might avoid detection in voice chat but repeatedly show toxic behavior in text or through reports.

- Contextual accuracy: Voice plus text together can clarify intent, reducing false positives.

- Stronger automation: The more data points the system uses, the more confidently it can automate sanctions without requiring manual review.

- Community trust: Players feel safer when moderation decisions are based on a complete picture, not isolated incidents.

For developers, context improves accuracy, reduces workload for human moderators, and speeds up enforcement. Everyone’s happy.
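One simple way to express cross-verification in code is sketched below in Python. It combines per-channel confidences with a noisy-OR (a swapped-in technique, not something the article prescribes) and only allows automated sanctions when at least two distinct channels agree. The `Signal` type, the source labels, the 0.95 threshold, and the two-source minimum are all assumptions for illustration.

```python
from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Signal:
    source: str        # hypothetical channel label: "voice", "text", "discord", "report"
    confidence: float  # detector or report confidence in [0, 1]


def combined_confidence(signals: list[Signal]) -> float:
    """Noisy-OR: chance that at least one signal is a true positive,
    under the (strong) assumption that signals are independent."""
    return 1.0 - prod(1.0 - s.confidence for s in signals)


def eligible_for_auto_sanction(signals: list[Signal],
                               threshold: float = 0.95,
                               min_sources: int = 2) -> bool:
    """Automate only if combined confidence clears the threshold AND at least
    `min_sources` distinct channels contributed (cross-verification)."""
    distinct_sources = {s.source for s in signals}
    return (len(distinct_sources) >= min_sources
            and combined_confidence(signals) >= threshold)


voice_and_text = [Signal("voice", 0.8), Signal("text", 0.9)]
print(round(combined_confidence(voice_and_text), 3))            # 0.98
print(eligible_for_auto_sanction(voice_and_text))                # True
print(eligible_for_auto_sanction([Signal("report", 0.7)] * 3))   # False: one channel only
```

Noisy-OR assumes signals are independent, which real ones often aren't (ten reports triggered by the same heated moment are not ten independent pieces of evidence). The distinct-source rule is a crude guard against that: many reports from one channel alone can't trigger an automatic sanction, but a report backed up by voice detection can.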

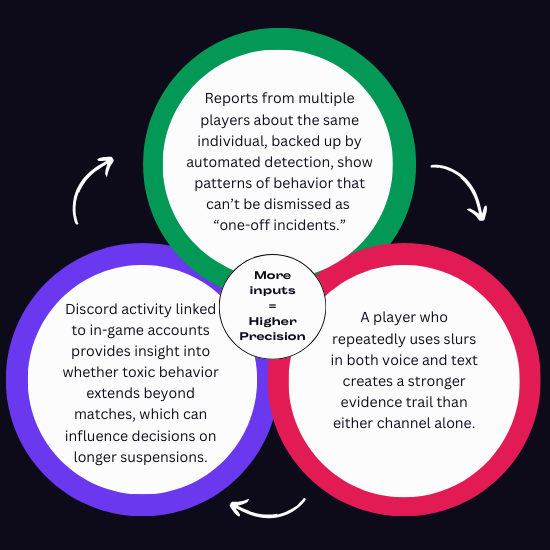

Why More Data Points Improve Results

Moderation works a lot like a measurement: the more independent inputs you have, the more reliable the result. Voice moderation is helpful on its own, but combined with text logs, Discord conversations, and reports, it reaches a new level of precision. For example:

- A player who repeatedly uses slurs in both voice and text creates a stronger evidence trail than either channel alone.

- Reports from multiple players about the same individual, backed up by automated detection, show patterns of behavior that can’t be dismissed as “one-off incidents.”

- Discord activity linked to in-game accounts provides insight into whether toxic behavior extends beyond matches, which can influence decisions on longer suspensions.

The combination of data points makes it harder for harmful players to slip through the cracks and reduces the chance of punishing someone unfairly.

Benefits for Players and Developers

When implemented correctly, voice moderation supported by multiple data streams creates several benefits for both players and developers.

For players:

- Safer, more enjoyable environments

- Greater trust in moderation systems

- Reduced exposure to harassment and abuse

- Stronger sense of community and inclusivity

For developers:

- Lower churn and higher retention rates

- More in-game spending as players feel safe investing time and money

- Stronger brand reputation in both gaming and mainstream media

- Competitive advantage in attracting new players and expanding audiences

Remember, negative behavior drives players away. Voice moderation helps keep them engaged, which directly impacts revenue and growth.

Building a Sustainable Moderation System

Creating an effective voice moderation strategy requires some careful planning. Unfortunately, you’re not just installing software (if only life were that easy).

Instead, you’re building systems that are fair, trustworthy, and scalable. You’ll need to incorporate:

- Transparency: Players need to know what behaviors are monitored and what consequences exist.

- Privacy: Systems must respect user privacy while still protecting community health.

- Customization: Moderation should reflect each game’s culture and audience.

- Automation with oversight: Automated tools should handle obvious cases, with humans reviewing more complex situations.

- Continuous updates: Language and behavior evolve quickly, so moderation models must be updated regularly.

Your goal shouldn’t be to eliminate competitive banter or passionate communication. It should be to filter out the harassment and abuse that drive people away from your platform (without spending all your spare time doing it yourself).
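The "automation with oversight" principle can be reduced to a small routing rule. The Python sketch below sends confident detections to automated enforcement, ambiguous ones to a human review queue, and the rest to a log. The `Route` names, the 0 to 3 severity scale, both thresholds, and the choice to give the most severe category a lower review bar are all assumptions to tune per game, not recommendations from this article.

```python
from enum import Enum


class Route(Enum):
    AUTO_ENFORCE = "auto_enforce"   # clear-cut: apply the sanction automatically
    HUMAN_REVIEW = "human_review"   # ambiguous: queue for a moderator
    LOG_ONLY = "log_only"           # keep for pattern detection, no action yet


def route_incident(severity: int, confidence: float,
                   auto_threshold: float = 0.95,
                   review_threshold: float = 0.6) -> Route:
    """Route one incident by severity (hypothetical 0-3 scale) and confidence (0-1)."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if severity == 0:
        return Route.LOG_ONLY
    if confidence >= auto_threshold:
        return Route.AUTO_ENFORCE
    # Assumption: the most severe category gets a human look at a lower bar.
    review_floor = review_threshold / 2 if severity >= 3 else review_threshold
    if confidence >= review_floor:
        return Route.HUMAN_REVIEW
    return Route.LOG_ONLY


for sev, conf in [(2, 0.97), (2, 0.7), (2, 0.4), (3, 0.4)]:
    print(sev, conf, route_incident(sev, conf).value)
```

Lowering the review bar for the most severe category reflects one possible trade-off: a possible threat is worth a moderator's minute even at moderate confidence, while borderline trash talk is not.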

The Future of Voice Moderation in Gaming

In 2025, voice moderation is a core feature of multiplayer design. As gaming communities grow and diversify, developers who fail to address toxic culture risk losing much more than players.

By combining voice moderation with chat, Discord, and user reports, studios create a more accurate, fair, and scalable system for protecting players. The result is healthier communities, stronger engagement, and a more sustainable business model.

Excellent audio-based moderation keeps multiplayer games safe, fair, and welcoming. If you need help cultivating a positive gaming environment, GGWP’s versatile voice moderation tool makes it easier to reduce toxicity and protect your players. Contact us today to build a safer, more engaging community.