12 Nov

For decades, online communities have relied on reactive moderation, a process where action is taken only after harmful behavior is reported. While this approach prevents some damage, it often allows abuse to spread before intervention occurs, leaving users exposed and community health at risk.

Today, expectations have evolved. Whether on a social platform, live-streaming channel, or online forum, audiences expect brands to provide safe, inclusive environments. Modern moderation tools are now proactive, combining automation, contextual understanding, and scalable enforcement to protect users while reducing the manual workload on moderation teams.

Below are the defining traits of an effective, modern moderation strategy, one designed to build healthier, more resilient communities across any digital platform.

Quick Takeaways

- Proactive enforcement handles violations as they happen, keeping communities safe without heavy manual work.

- Context-aware tools reduce false positives by looking at user history, reports, and conversation flow.

- Automated sanctions scale with community size and free moderators to focus on complex cases.

- Global language support protects communities across regions and user bases.

- User reporting, appeals, and dashboards create transparency and help moderators make informed decisions.

1. Proactive Protection

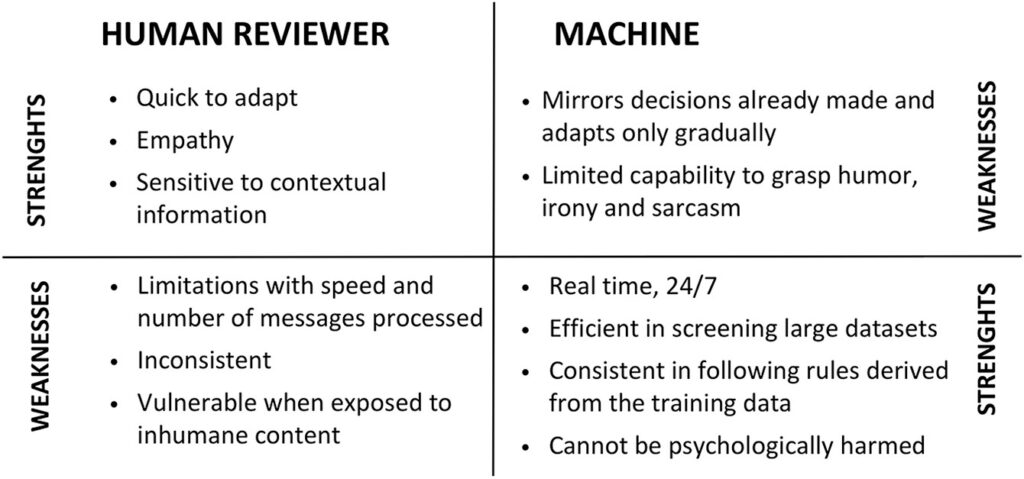

Strong moderation systems don’t wait for reports – they act in real time. Automated rules, powered by AI and natural language processing, detect and enforce community standards instantly. These tools can block or mask harmful content, issue warnings, or apply temporary suspensions before harm escalates.

Key Benefits:

- Customizable sanction rules reflect each community’s culture.

- Real-time enforcement limits abuse exposure.

- Consistent standards set clear user expectations.
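To make the idea of masking content at send time concrete, here is a minimal sketch. It swaps in a plain word list for the AI and natural language processing described above, purely to keep the example short. The terms, the `mask_message` helper, and the masking style are all assumptions for illustration, not any product's API.

```python
import re

# Placeholder terms for illustration only; a real deployment would rely on
# a maintained list or a trained classifier rather than two hard-coded words.
BLOCKED_TERMS = ["darn", "heck"]

# Match whole words only, case-insensitively, so "heckle" is left alone.
_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in BLOCKED_TERMS) + r")\b",
    re.IGNORECASE,
)


def mask_message(text: str) -> str:
    """Replace each blocked term with asterisks of the same length."""
    return _PATTERN.sub(lambda match: "*" * len(match.group()), text)


masked = mask_message("Well heck, that was DARN close")
print(masked)  # Well ****, that was **** close
```

Because the check runs before the message is delivered, other users never see the original wording, which is the practical difference between proactive and report-driven enforcement.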

2. Context-Sensitive Judgment

Not every incident is clear-cut. Effective moderation accounts for context, for example by weighing conversation flow, tone, and user history before making enforcement decisions. Contextual tools prevent over-correction and ensure fairness.

Considerations include:

- Reviewing conversation threads for intent and tone.

- Factoring in user reputation and prior behavior.

- Avoiding unnecessary penalties for isolated incidents.

3. Smart Automation for Scale

Manual review doesn’t scale, but automation does. Intelligent systems identify patterns of abuse, prioritize severity, and handle repetitive moderation tasks so human moderators can focus on complex or nuanced cases.

Why it matters:

- Reduces operational costs.

- Adapts dynamically as community size fluctuates.

- Supports both small and global user bases efficiently.
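One way to picture severity-based prioritization is a review queue that always hands moderators the most severe open report first. The sketch below is a rough illustration: the `ReviewQueue` class, the category keys, and the severity weights are hypothetical and would need tuning for a real community.

```python
import heapq
from itertools import count

# Hypothetical severity weights (higher means more urgent).
SEVERITY = {
    "violence": 5,
    "self_harm": 5,
    "identity_hate": 4,
    "verbal_abuse": 3,
    "spam": 1,
}


class ReviewQueue:
    """Priority queue that returns the most severe report first."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker: equal severity stays first-in, first-out

    def push(self, message_id: str, category: str) -> None:
        severity = SEVERITY.get(category, 0)
        # heapq is a min-heap, so severity is negated to pop the highest first.
        heapq.heappush(self._heap, (-severity, next(self._order), message_id, category))

    def pop(self):
        _, _, message_id, category = heapq.heappop(self._heap)
        return message_id, category

    def __len__(self):
        return len(self._heap)


queue = ReviewQueue()
queue.push("m1", "spam")
queue.push("m2", "violence")
queue.push("m3", "verbal_abuse")
queue.push("m4", "violence")

order = [queue.pop()[0] for _ in range(len(queue))]
print(order)  # ['m2', 'm4', 'm3', 'm1']
```

Low-severity items such as spam can then be handled automatically, while the top of the queue goes to human moderators.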

4. Multi-Channel Coverage

Communities communicate through text, images, and voice. A modern moderation platform must analyze all of them.

Comprehensive coverage includes:

- Text and voice moderation.

- User-generated image and video review.

- Cross-platform incident tracking for consistent enforcement.

5. Enforcement That Fits the Violation

Detection alone will never be enough. Enforcement must match context and severity. Modern moderation systems apply flexible sanctions, such as:

- Warnings for first-time violations.

- Temporary mutes or suspensions for repeat offenses.

- Permanent bans for severe or ongoing behavior.

Customize your brand’s sanction ladder to reflect your community’s culture and expectations. This flexibility reinforces fairness and maintains user trust.
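A sanction ladder like the one described above can be sketched as a small decision function. The threshold of four offenses and the `choose_sanction` name are assumptions for illustration; each community would set its own values.

```python
from enum import Enum


class Sanction(Enum):
    WARNING = "warning"
    TEMPORARY_MUTE = "temporary_mute"
    PERMANENT_BAN = "permanent_ban"


# Hypothetical threshold: the offense count (including the current one)
# at which ongoing behavior leads to a permanent ban.
BAN_AT_OFFENSE = 4


def choose_sanction(prior_violations: int, severe: bool = False) -> Sanction:
    """Pick a sanction from the user's history and the violation's severity."""
    offense_number = prior_violations + 1
    if severe or offense_number >= BAN_AT_OFFENSE:
        return Sanction.PERMANENT_BAN
    if offense_number == 1:
        return Sanction.WARNING
    return Sanction.TEMPORARY_MUTE


for prior in range(4):
    print(prior, choose_sanction(prior).value)
# 0 warning
# 1 temporary_mute
# 2 temporary_mute
# 3 permanent_ban
```

Keeping the thresholds in configuration rather than in code makes it easier to adjust the ladder as community norms change.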

6. Human Oversight and Transparency

Automation is powerful but not infallible. Effective systems empower human moderators with intuitive dashboards showing:

- Full conversation history.

- User reputation and infraction timelines.

- Real-time queues for review.

- Built-in appeals workflows.

This combination ensures both speed and judgment, which is essential for maintaining user confidence.

7. Reputation Systems

A single incident doesn’t define a user. Reputation systems aggregate conduct over time, rewarding consistent positive participation and flagging repeat violations. These insights can:

- Reward constructive contributors.

- Flag chronic offenders early.

- Improve fairness and consistency in enforcement.
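As a rough illustration of aggregating conduct over time, the sketch below sums weighted events and lets older events count for less. The exponential decay, the 30-day half-life, and the event weights are assumptions chosen for the example, not a description of how any specific reputation system works.

```python
# Assumed half-life: an event's influence halves every 30 days.
HALF_LIFE_DAYS = 30.0


def reputation(events, today: float) -> float:
    """Sum event weights, decayed by age.

    events: iterable of (day, weight) pairs, where positive weights are
    constructive actions and negative weights are violations.
    """
    score = 0.0
    for day, weight in events:
        age_days = today - day
        score += weight * 0.5 ** (age_days / HALF_LIFE_DAYS)
    return score


history = [
    (0, -4.0),   # a violation on day 0
    (30, 1.0),   # a positive contribution on day 30
]
print(reputation(history, today=30))  # -1.0
```

With decay, an old violation fades rather than following a user forever, while repeated recent violations still push the score down quickly.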

8. Global Language Support

Community moderation doesn’t stop at language barriers. Multilingual moderation ensures global inclusivity and consistent protection. Platforms that support major languages, from English and Spanish to Arabic, Chinese, and beyond, prevent gaps that bad actors might exploit.

9. Actionable Insights

Moderation is not only about removing harmful content. It can also drive smarter business decisions. Monitoring sentiment, frequency of incidents, and behavior trends reveals valuable insights into:

- Community health and engagement.

- Product or feature pain points.

- Timing or context behind spikes in toxicity.

These insights help community managers, marketers, and safety teams align operational goals with user wellbeing.
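For instance, spotting the timing behind a spike in toxicity can start with something as simple as counting incidents per hour. The sketch below flags hours whose count is well above the typical hour. The `toxicity_spikes` helper and the factor of 3 are assumptions for illustration, and hours with no incidents at all are ignored when computing the baseline.

```python
from collections import Counter
from statistics import median


def toxicity_spikes(incident_hours, factor: float = 3.0):
    """Return the hours whose incident count is at least `factor` times the median."""
    counts = Counter(incident_hours)
    if not counts:
        return []
    baseline = median(counts.values())
    return sorted(hour for hour, n in counts.items() if n >= factor * baseline)


# Hour of day (0-23) for each recorded incident; sample data for illustration.
hours = [9, 9, 13, 14, 20, 20, 20, 20, 20, 21]
print(toxicity_spikes(hours))  # [20]
```

A flagged hour is only a starting point: the useful part is checking what happened then, such as an event, a release, or a raid.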

Incident Categories Many Communities Screen

- Verbal Abuse: Hostile, insulting, and demeaning language directed at others.

- Self-Harm: Content that encourages, glorifies, or suggests self-injury, suicide, or other forms of self-destructive behavior.

- Sexual Content: Language containing explicit sexual comments, innuendos, or references that are inappropriate or offensive.

- Profanity: Messages that use obscene, vulgar, or inappropriate language, or swear words.

- Violence: Language that encourages, glorifies, or threatens physical harm or destruction towards individuals or groups.

- Identity Hate: Messages that use discriminatory language towards a person or group based on their religion, ethnicity, nationality, race, gender, sexual orientation, or other identity factors.

- Spam/Link Sharing: Repetitive or excessive messages or external links that disrupt the experience for others.

- Drugs/Alcohol: References to illegal substances, drug use, or drug culture that are inappropriate for the environment.

- PII: Messages that contain personally identifiable information (PII).

The ROI of Effective Moderation

Excellent moderation is more than just compliance; it’s a growth strategy.

The payoffs:

- Higher retention and engagement

- Stronger brand reputation

- Cost efficiency through automation

- Safer, more inclusive spaces that attract new users

Are Your Moderation Tools Meeting Player Expectations?

Yesterday’s reactive approach doesn’t work for today’s communities. The best platforms act in real time, apply contextual intelligence, and scale effortlessly.

At GGWP, we help platforms evolve from reactive to proactive. Our AI-powered solutions handle text, voice, and community moderation with accuracy and transparency, so your team can focus on growth, not damage control.